Date: October 29, 2025

AI in Customer Service: Smarter Conversations or Just Smarter Bots?

The Cost of Automated Frustration

Every organization is eager to apply artificial intelligence (AI) to improve customer interactions. According to recent industry analysis, 85% of customer service leaders are either piloting or planning the use of generative AI (GenAI) to restructure service delivery. The intended goal is clear: utilize smarter bots to handle routine inquiries, achieving speed and scale.

Yet, customers frequently share a painful reality: being trapped in circular chatbot loops, forced to repeat information, or, worse, receiving confidently presented but incorrect answers. The pursuit of efficiency often comes at the expense of user experience (UX).

Data confirms this operational disconnect: nearly one in five consumers who use AI for customer service report finding no value in the experience. This represents a failure rate four times higher than for other AI applications. The central question for executives today is whether current AI deployment truly delivers smarter conversations built on accountability, or if it merely introduces more sophisticated failure points.

Smarter Bots: The Efficiency Dividend and Its Critical Liabilities

The recent progress in GenAI is undeniable. Advanced bots, powered by large language models (LLMs) and natural language processing (NLP), can now move far beyond simple frequently asked questions (FAQs). They understand free-form queries, adjust conversational tone, and leverage company knowledge bases to deliver natural, engaging experiences.

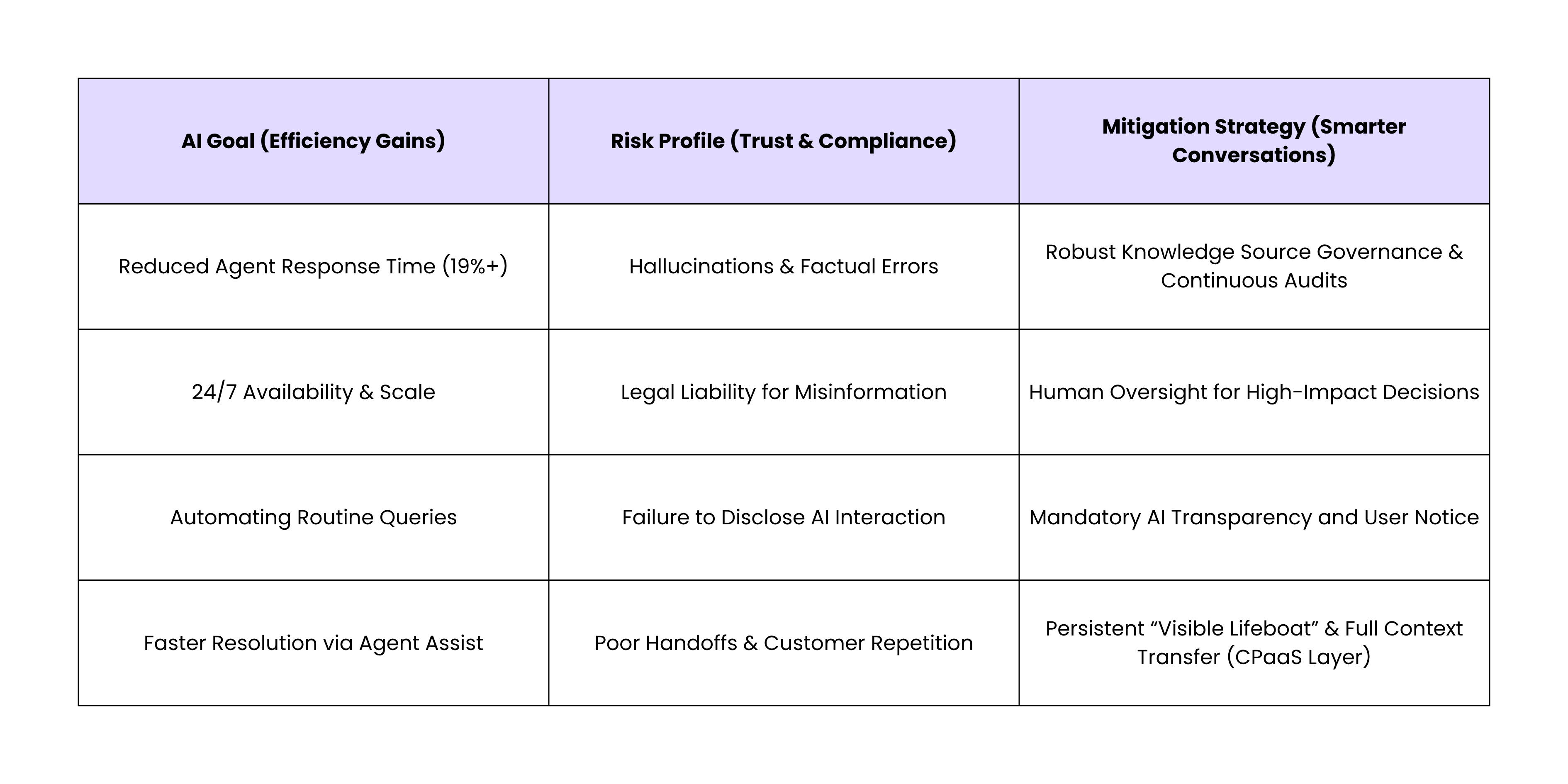

For businesses that successfully integrate these tools, the results include measurable gains, such as a reported 19% reduction in response time. This frees human agents to focus their expertise and empathy on the complex, unique customer tasks that genuinely require human creativity and judgment.

However, the technical capability to generate novel text, the very feature that enables conversational fluency, introduces a severe exposure: AI Hallucinations. These are responses generated by the AI that sound entirely plausible but are factually baseless or fabricated.

The fundamental issue is that this technical limitation quickly becomes a legal and reputational risk. If an AI provides misinformation regarding safety protocols, financial product terms, or regulatory guidance, the business may be held liable for it.

Providing incorrect regulatory guidance can lead to compliance violations, lawsuits, and financial penalties. Furthermore, errors that pertain to legally protected classes or groups risk liability under anti-discrimination statutes.

Governing conversational AI therefore requires leadership to treat system accuracy and accountability as technical mandates, not optional additions.

AI in CX: Balancing Efficiency with Risk

The Foundation of Trust: Governance and Transparency

Conversational AI relies heavily on processing personal data, ranging from basic identity information to purchase histories and behavioral patterns. Compliance with stringent global privacy regulations, such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA), is non-negotiable. Organizations must implement robust data governance and security hardening practices, ensuring that this sensitive data is protected.

Crucially, compliance is the infrastructure upon which customer trust is built, not merely a regulatory checkpoint. The commitment to transparency is paramount: users must receive clear, explicit notice when they are interacting with an AI system rather than a human agent.

This ethical approach, which prioritizes transparency, fairness, and explainability, yields commercial benefits. Companies that commit to using AI ethically are 85% more likely to earn customer trust, which in turn fuels long-term loyalty and enhances market access.

Furthermore, accountability must extend beyond internal systems to vendor management. Companies must negotiate Data Processing Agreements (DPAs) and confirm that third-party AI vendors are not utilizing proprietary chat data to train their models without explicit, auditable consent from both the brand and the customer.

Regular audits are necessary to detect and correct algorithmic or data bias, preventing the system from producing unfair or inequitable outcomes for specific customer demographics.

Engineering the Conversation: UX and the Human Handoff

The successful hybrid model (technology for efficiency, humans for empathy) hinges on the quality of the transition between the two. If the human handoff fails, any efficiency gains realized by the bot are instantly negated, leading to severe customer frustration and ticket escalation.

To engineer a successful conversation, two principles must govern the interaction design. First, the "visible lifeboat" principle dictates that users should never feel trapped by the bot. A persistent, visible, and easily accessible option to escalate to a human agent must be available at all times.

Moreover, the bot must be intelligent enough to recognize its limits, escalating the conversation based on clear triggers, such as detection of negative sentiment, high complexity, or repeated failure to understand the query.
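The escalation triggers described above can be sketched as a small rule check. This is a minimal illustration, not a production design: the `TurnSignals` fields, the threshold values, and the reason labels are all hypothetical, and the sentiment and complexity scores are assumed to come from whatever classifiers the platform already runs.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds; real values should be tuned on actual conversations.
NEGATIVE_SENTIMENT_THRESHOLD = -0.5  # sentiment assumed to lie in [-1.0, 1.0]
MAX_COMPLEXITY = 0.8                 # complexity assumed to lie in [0.0, 1.0]
MAX_FAILED_TURNS = 2                 # consecutive turns the bot failed to understand


@dataclass
class TurnSignals:
    """Signals for the latest user turn, produced by assumed upstream classifiers."""
    sentiment: float
    complexity: float
    failed_turns: int
    user_requested_human: bool = False


def escalation_reason(signals: TurnSignals) -> Optional[str]:
    """Return why the conversation should go to a human, or None to stay with the bot."""
    # The "visible lifeboat": an explicit request always wins.
    if signals.user_requested_human:
        return "user_request"
    if signals.sentiment <= NEGATIVE_SENTIMENT_THRESHOLD:
        return "negative_sentiment"
    if signals.failed_turns >= MAX_FAILED_TURNS:
        return "repeated_failure"
    if signals.complexity >= MAX_COMPLEXITY:
        return "high_complexity"
    return None
```

Returning a reason label rather than a bare boolean lets the receiving agent see why the bot stepped aside, and gives the team a way to measure which trigger fires most often.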

Second, context preservation is non-negotiable. When the handoff occurs, the system must transfer the entire conversation transcript and all gathered user context to the human agent.

Agents must be equipped and trained to quickly review this context, potentially using AI-generated summaries, allowing them to continue the dialogue immediately without asking the customer to repeat information already supplied.
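One way to picture context preservation is as a single handoff record that travels with the conversation. The sketch below is illustrative only: `HandoffPacket`, `build_handoff`, and `missing_fields` are hypothetical names, and the `summarize` callable stands in for whatever AI summarization service a team might plug in.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Message:
    sender: str  # "customer" or "bot"
    text: str


@dataclass
class HandoffPacket:
    """Everything the human agent needs to continue without re-asking."""
    conversation_id: str
    reason: str
    transcript: List[Message]
    collected_fields: Dict[str, str] = field(default_factory=dict)
    summary: str = ""


def build_handoff(
    conversation_id: str,
    reason: str,
    transcript: List[Message],
    collected_fields: Dict[str, str],
    summarize: Optional[Callable[[List[Message]], str]] = None,
) -> HandoffPacket:
    """Bundle the full transcript and gathered context for the agent."""
    packet = HandoffPacket(
        conversation_id=conversation_id,
        reason=reason,
        transcript=list(transcript),          # full transcript, not a truncated tail
        collected_fields=dict(collected_fields),
    )
    if summarize is not None:
        # Optional summary so the agent can get oriented quickly.
        packet.summary = summarize(packet.transcript)
    return packet


def missing_fields(packet: HandoffPacket, required: List[str]) -> List[str]:
    """Fields the agent still has to ask for; anything already supplied is skipped."""
    return [name for name in required if not packet.collected_fields.get(name)]
```

With a record like this, the agent desktop can show the summary first, keep the full transcript one click away, and prompt only for the fields that `missing_fields` reports, so the customer is never asked to repeat what the bot already collected.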

A warm transition that preserves context ensures the customer feels heard and valued, securing the ultimate goal of a faster, better resolution.

Actionable Strategy

Scaling conversational AI requires executive teams to shift their focus from maximizing the volume of automated interactions to engineering smarter, more accountable conversations. The commitment to compliance and context preservation is what differentiates a disruptive technology from a damaging liability.

Organizations must prioritize the infrastructure that supports ethical deployment, legal accountability, and flawless customer journey flow.

To navigate the intricacies of AI disclosure requirements, robust data governance, and high-stakes conversational management, businesses must select agile and compliant communication infrastructure partners.

Signalmash provides custom communication solutions built for AI innovators, offering intelligent messaging and real-time voice automation tools. By integrating critical compliance features and providing scalable, white-label infrastructure, Signalmash empowers organizations to foster customer trust while minimizing reputational and legal risk.

Ready to design compliant, human-centered customer conversations?

Connect with Signalmash, the boutique CPaaS partner helping brands blend AI efficiency with genuine customer care. Visit www.signalmash.com or message us on LinkedIn to start the conversation.

Tags: AI, Business, Communications, Technology
